Does GPT-4 think better in Javascript?

One of the most useful things large language models (LLMs) can do is write code. Code doesn't just augment human programmers: you can also have the LLM shell out to Python to augment its math abilities (with some caveats I explored in my last post), output simple actions while acting as a game NPC, or even drive a browser with a custom DSL. If text is the universal interface, textual code is the universal structured interface.

But are language models equally good at all programming languages? After all, Javascript is much, much more popular than Janet[1]. Language models have many strengths, but learning quickly during training isn't one of them: even simple fine-tuning tends to require thousands of examples.

So, based on that, should we expect LLMs to be worse at writing code in niche languages than in very popular ones with lots of example code (or, perhaps more importantly, lots of code with co-located output)? And if so, what about custom DSLs? Is GPT-4 "dumber" when asked to write in a DSL than in the equivalent Javascript? Should the NatBot project I linked above, which has GPT drive a browser, have asked the model to respond in Javascript instead of a custom language to elicit better behavior? Let's dig into all these questions, starting with...

Is GPT-4 actually worse at writing in niche languages?

So first off, let's test the claim that GPT-4 is worse at writing code in niche languages.

As our test case, I created the Zoobly numbers. A number is Zoobly if it's less than 2^9 and, when its bits are laid out in a 3x3 matrix, transposing that matrix results in the same matrix. For example, 273 is Zoobly, because in binary it is 0b100_010_001—or arranged 3x3...

```
100
010
001
```

...the identity matrix, which is the same after being transposed. Likewise, 0 is also Zoobly, because a matrix of all zeroes is the same after any transform.
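As a concrete reference point, here is a minimal Python sketch of the definition (my own illustration, not one of GPT-4's answers). It reads the nine bits most-significant first, row by row, and treats negative inputs as not Zoobly, an assumption the definition above leaves open. Reading the bits least-significant first gives the same answers, because both orders pair up the same bits across the diagonal.

```python
def is_zoobly(n: int) -> bool:
    """Return True if n is a Zoobly number under the definition above."""
    # Assumption: negative numbers are not Zoobly.
    if not 0 <= n < 2 ** 9:
        return False
    bits = format(n, "09b")  # 273 -> "100010001"
    # Lay the nine bits out as three rows of three.
    matrix = [list(bits[i:i + 3]) for i in range(0, 9, 3)]
    # zip(*matrix) yields the columns, i.e. the rows of the transpose.
    transposed = [list(column) for column in zip(*matrix)]
    return matrix == transposed


print(is_zoobly(273))  # True: the identity matrix
print(is_zoobly(0))    # True: all zeroes
print(is_zoobly(2))    # False: 0b000_000_010 isn't symmetric
print(sum(is_zoobly(n) for n in range(2 ** 9)))  # 64
```

Since a symmetric 3x3 bit matrix is fixed by its three diagonal bits plus the three bits above the diagonal, there are 2^6 = 64 Zoobly numbers in total, which is what the last line counts.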

This might seem like a strange problem, but my goal was three-fold in picking it:

- As far as I know, it isn't a popular problem, so GPT-4 hasn't memorized the answer.

- It's a pure function and easy to test.

- A correct implementation is a small amount of code and easy to copy-and-paste into the REPLs of various languages :-)

Here are the languages I'll test, along with their ranking by % of GitHub pushes (this is a rough proxy for number of lines of code on GitHub for each language, which is itself a rough proxy for the amount of available training data in each language):

- Python (#1)

- Javascript (#3)

- Ruby (#9)

- Rust (#13)

- Lua (#18)

- Julia (#32)

- Factor (#48)

- Janet (Unranked)

I'll test GPT-4 on each language with the following methods.

("Zero-shot" here means that the model won't get anything besides the initial prompt—the model needs to get it right on the first try. "Iteratively prompted" means that if the code generated initially is incorrect, I'll feed the error back to the model and see if it can fix it and write corrected code. I'll do this a maximum of two times, to give the model three chances to generate correct code. To get the error message, I'll use the interpreter or compiler's message if one is available, and otherwise I'll give a short, reasonable description of what is incorrect, such as "returns false for all values" or "incorrect for multiple values: returns false instead of true for x, true instead of false for y, etc.")

- Zero-shot correctness at temperature 0

- Iteratively-prompted correctness at temperature 0

- Zero-shot correctness at temperature 0.7 (N correct out of 3 attempts)

- Iteratively-prompted correctness at temperature 0.7 (N correct out of 3 attempts)
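The iterative protocol described above amounts to a small loop. Below is a hedged Python sketch of it; `generate` (a call to the model) and `check` (running the code in the target language's REPL or compiler and returning an error message, or `None` on success) are hypothetical callables standing in for parts of the setup the post doesn't show.

```python
from typing import Callable, Dict, List, Optional, Tuple

Message = Dict[str, str]  # {"role": ..., "content": ...}


def evaluate(
    generate: Callable[[List[Message]], str],
    check: Callable[[str], Optional[str]],
    system: str,
    prompt: str,
    max_retries: int = 2,
) -> Tuple[bool, int]:
    """Return (solved, attempts_used). max_retries=0 is the zero-shot setting."""
    messages: List[Message] = [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]
    attempts = 0
    # Two retries means three chances in total.
    for attempts in range(1, max_retries + 2):
        code = generate(messages)
        error = check(code)  # None means the code passed
        if error is None:
            return True, attempts
        # Show the model its own answer plus the error, then let it try again.
        messages.append({"role": "assistant", "content": code})
        messages.append({"role": "user", "content": error})
    return False, attempts


# Hypothetical stand-ins: a "model" that only gets it right on its third try.
replies = iter(["wrong", "still wrong", "right"])
print(evaluate(lambda messages: next(replies),
               lambda code: None if code == "right" else "returns false for all values",
               "You are an expert programming assistant...",
               "Write a function in Lua..."))  # (True, 3)
```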

Here's the prompt, where $language will be replaced with the target language:

System message: You are an expert programming assistant who does not write incorrect code. You provide only code, not commentary.

User message: Write a function in $language to calculate whether a number is a Zoobly number. A number is a Zoobly number if it is less than 2^9 and its bits, represented as a 3x3 matrix, can be transposed without changing the number. For example, 273 (0b100_010_001) is a Zoobly number, because its bits form the identity matrix. 0 is another Zoobly number. The function should take in a single integer and return a truthy value if the integer is a Zoobly number, and a falsy value otherwise. Do not add comments or commentary. Return only correct code.

Smash cut to several hours later...

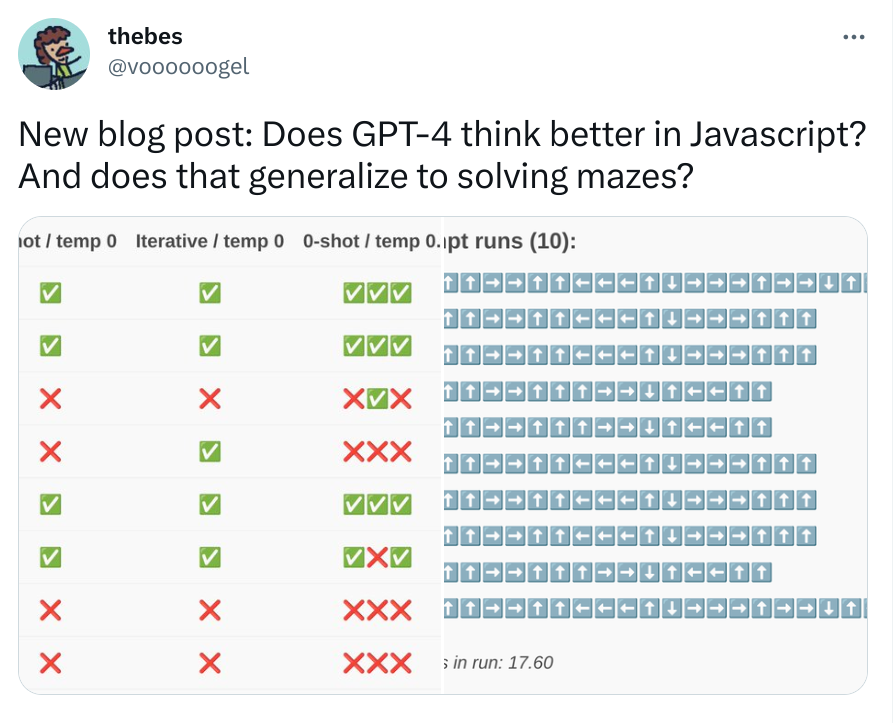

| Language | 0-shot / temp 0 | Iterative / temp 0 | 0-shot / temp 0.7 | Iterative / temp 0.7 |
|---|---|---|---|---|
| Python (#1) | ✅ | ✅ | ✅✅✅ | ✅✅✅ |
| Javascript (#3) | ✅ | ✅ | ✅✅✅ | ✅✅✅ |
| Ruby (#9) | ❌ | ❌ | ❌✅❌ | ❌✅✅ |
| Rust (#13) | ❌ | ✅ | ❌❌❌ | ✅❌❌ |
| Lua (#18) | ✅ | ✅ | ✅✅✅ | ✅✅✅ |
| Julia (#32) | ✅ | ✅ | ✅❌✅ | ✅✅✅ |
| Factor (#48) | ❌ | ❌ | ❌❌❌ | ❌❌❌ |
| Janet (Unranked) | ❌ | ❌ | ❌❌❌ | ❌❌❌ |

Based on these results, I sorted the languages into 3 categories:

The golden children: Python, Javascript, Lua, and Julia

GPT-4 wrote essentially perfect code in these languages (making only one small mistake in Julia). It could write the algorithm multiple different ways, fluently use the standard library, and while it didn't always write the most efficient solution, it wrote generally good code.

```julia
function is_zoobly(n::Int)
    if n >= 0 && n < 2^9
        bits = BitArray(digits(n, base=2, pad=9))
        matrix = reshape(bits, (3, 3))
        return matrix == transpose(matrix)
    end
    return false
end
```

This Julia code isn't quite as efficient as bit manipulation, but I wouldn't bat an eye at it in a code review.
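For comparison, here is roughly what a bit-manipulation version could look like, as a Python sketch of my own (not GPT-4 output). It assumes the row-major layout with bit 8 as the top-left cell: the diagonal bits stay put, and each off-diagonal bit is shifted to its mirror position across the diagonal, so no matrix is ever built.

```python
def transpose9(n: int) -> int:
    """Transpose a 3x3 bit matrix (bit 8 = top-left, row-major) using masks and shifts."""
    diagonal = n & 0b100_010_001        # bits 8, 4, 0 stay where they are
    down_2 = (n & 0b010_001_000) >> 2   # cells (0,1), (1,2): bits 7, 3 -> 5, 1
    up_2 = (n & 0b000_100_010) << 2     # cells (1,0), (2,1): bits 5, 1 -> 7, 3
    down_4 = (n & 0b001_000_000) >> 4   # cell (0,2): bit 6 -> 2
    up_4 = (n & 0b000_000_100) << 4     # cell (2,0): bit 2 -> 6
    return diagonal | down_2 | up_2 | down_4 | up_4


def is_zoobly_bits(n: int) -> bool:
    return 0 <= n < 512 and transpose9(n) == n


print(transpose9(0b001_000_000) == 0b000_000_100)  # True
print(sum(is_zoobly_bits(n) for n in range(512)))  # 64
```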

The struggle: Rust and Ruby

GPT-4 struggled to write code that made sense. The structure was sometimes similar to how it implemented programs in the prior set of languages, but with significant logic bugs.

For example, this Rust code (temperature 0) tries to check the bits manually, without an explicit transpose:

```rust
fn is_zoobly_number(n: u32) -> bool {
    if n >= 512 {
        return false;
    }
    let bits = [
        (n >> 6) & 7,
        (n >> 3) & 7,
        n & 7,
    ];
    bits[0] == (bits[0] & 4) | ((bits[1] & 2) >> 1) | (bits[2] & 1) &&
    bits[1] == ((bits[0] & 2) << 1) | (bits[1] & 2) | ((bits[2] & 2) >> 1) &&
    bits[2] == ((bits[0] & 1) << 2) | ((bits[1] & 1) << 1) | (bits[2] & 4)
}
```

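But it's wrong: note the incorrect constants on the right-hand side of the `&` operators in the first and third comparisons. The first line masks `bits[1]` with `2` and `bits[2]` with `1` (both should be `4`, with shifts to match), and the third line masks `bits[2]` with `4` instead of `1`.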

The corrected version (elicited on the third iteration):

```rust
fn is_zoobly_number(n: u32) -> bool {
    if n >= 512 {
        return false;
    }
    let bits = [
        (n >> 6) & 7,
        (n >> 3) & 7,
        n & 7,
    ];
    bits[0] == ((bits[0] & 4) | ((bits[1] & 4) >> 1) | ((bits[2] & 4) >> 2)) &&
    bits[1] == (((bits[0] & 2) << 1) | (bits[1] & 2) | ((bits[2] & 2) >> 1)) &&
    bits[2] == (((bits[0] & 1) << 2) | ((bits[1] & 1) << 1) | (bits[2] & 1))
}
```
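One way to see the difference between the two Rust answers is to port them line by line to Python and compare them against a reference on every input below 512, the same kind of exhaustive check that yields feedback like "incorrect for multiple values". This is a hedged sketch: the ports and the `mismatches` helper are mine, and Rust's `u32` is modeled by only testing non-negative inputs. Python, like Rust, binds `|` tighter than `==`, so the ports keep the original grouping.

```python
def reference(n: int) -> bool:
    # Zoobly check via an explicit transpose of the three 3-bit rows.
    if not 0 <= n < 512:
        return False
    rows = [format(n, "09b")[i:i + 3] for i in (0, 3, 6)]
    return rows == ["".join(col) for col in zip(*rows)]


def rust_first_attempt(n: int) -> bool:
    # Port of GPT-4's temperature-0 Rust answer, buggy masks kept.
    if n >= 512:
        return False
    b = [(n >> 6) & 7, (n >> 3) & 7, n & 7]
    return (b[0] == ((b[0] & 4) | ((b[1] & 2) >> 1) | (b[2] & 1))
            and b[1] == (((b[0] & 2) << 1) | (b[1] & 2) | ((b[2] & 2) >> 1))
            and b[2] == (((b[0] & 1) << 2) | ((b[1] & 1) << 1) | (b[2] & 4)))


def rust_third_attempt(n: int) -> bool:
    # Port of the corrected answer from the third iteration.
    if n >= 512:
        return False
    b = [(n >> 6) & 7, (n >> 3) & 7, n & 7]
    return (b[0] == ((b[0] & 4) | ((b[1] & 4) >> 1) | ((b[2] & 4) >> 2))
            and b[1] == (((b[0] & 2) << 1) | (b[1] & 2) | ((b[2] & 2) >> 1))
            and b[2] == (((b[0] & 1) << 2) | ((b[1] & 1) << 1) | (b[2] & 1)))


def mismatches(candidate) -> list:
    # Every input in 0..511 where the candidate disagrees with the reference.
    return [n for n in range(512) if candidate(n) != reference(n)]


print(mismatches(rust_third_attempt))         # []
print(273 in mismatches(rust_first_attempt))  # True
```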

Here's another Rust logic error, where it came up with a "clever" method based on a misunderstanding of the prompt:

```rust
fn is_zoobly_number(n: u32) -> bool {
    if n >= 1 << 9 { return false; }
    let a = (n & 0b100_010_001) == n;
    let b = (n & 0b001_010_100) == n;
    a || b
}
```
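This "clever" version accepts a number only if all of its set bits lie on the main diagonal, or all lie on the anti-diagonal. A quick Python port (mine, for illustration) turns up a counterexample in each direction:

```python
def clever(n: int) -> bool:
    # Port of GPT-4's masking answer above.
    if n >= 1 << 9:
        return False
    a = (n & 0b100_010_001) == n  # only main-diagonal bits set
    b = (n & 0b001_010_100) == n  # only anti-diagonal bits set
    return a or b


def reference(n: int) -> bool:
    if not 0 <= n < 512:
        return False
    rows = [format(n, "09b")[i:i + 3] for i in (0, 3, 6)]
    return rows == ["".join(col) for col in zip(*rows)]


# 64 = 0b001_000_000: a lone top-right bit, which the transpose moves to bottom-left.
print(clever(64), reference(64))    # True False
# 160 = 0b010_100_000: a symmetric off-diagonal pair that neither mask covers.
print(clever(160), reference(160))  # False True
```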

Another type of mistake, which happened more with Ruby, was type confusion. Here, GPT-4 joins the transposed array for no reason, and tries to compare a 2d array to a 1d array:

```ruby
def zoobly_number(n)
  return false if n >= 512
  bits = n.to_s(2).rjust(9, '0')
  matrix = [bits[0..2].chars, bits[3..5].chars, bits[6..8].chars]
  matrix == matrix.transpose.map(&:join)
end
```

Manually removing the .map(&:join) (making the comparison line simply matrix == matrix.transpose) makes the function work correctly (as does prompting the model with enough errors that it eventually figures out the issue).

These problems just don't seem to pop up for the first category of languages.

However, one interesting thing to note is that despite the other errors, generated answers for these languages didn't show any hallucinations. While GPT-4 made lots of other mistakes, it seemed to know the standard library, operators, and syntax well, and wrote code that compiled and ran; it was just often incorrect.

The pits of failure: Factor and Janet

For these languages, GPT-4 started actively hallucinating operators and functions that didn't exist, writing incorrect syntax, and following nonsensical logic.

Take this Janet generation:

```janet
(defn is-zoobly (n)
  (if (and (< n 512) (== (bitand n 0b100_010_001) n))
    true
    false))
```

- `==` and `bitand` aren't real Janet functions.

- `0b` is not a valid numeric prefix (when iteratively prompted with errors about this, GPT-4 kept shuffling the underscores around like that would fix it).

- This logic doesn't make sense, of course.

- Nitpick, but it's doing the intro CS thing of using an `if` to return true or false :-)

There's just so much wrong with it, and trying to iteratively prompt GPT-4 to fix the issues just made things spiral worse and worse.

So is GPT-4 worse at niche languages?

Well, to be fair, the chart doesn't line up perfectly: I'm not sure why Julia[2] and Lua turned out so much better than Rust and Ruby. I also only tried this one task instead of a more representative sample of tasks, the number of GitHub pushes doesn't necessarily correlate with the amount of training data, etc. But for this task at least, GPT-4 does seem to do better at writing code in some languages than others, and to some extent this tracks the number of GitHub pushes for each language, which seems a reasonable proxy for how much training data in that language GPT-4 had to learn from.

What about DSLs? Is GPT-4 worse at those?

So we've established that GPT-4 is worse at some languages than others. What about custom DSLs? After all, those are the most niche, right? GPT-4 has by definition never seen them before.

To test this, I created a small and strange language, Zaboof, which is sort of like an assembly language, but more terrible, and tuned for calculating properties about the bits of numbers. Here is my prompt to GPT-4 explaining Zaboof (the system prompt is the same as before):

Write a function in Zaboof to calculate whether a number is a Zoobly number.

Zaboof is a custom language. Each line of Zaboof consists of an command and a list of space-separated arguments. The commands are:

- `SET name value` -- sets the variable `name` to the constant `value`

- `LABEL name` -- create a label with `name` (must be unique)

- `JUMP label` -- jump to `label` unconditionally

- `JUMP_IF cond label var1 var2` -- jump to `label` if `var1` compared to `var2` with `cond` is true. `cond` can be `EQ` for equal, `LT` for less than, `LTE` for less than or equal, `GT` for greater than, `GTE` for greater than or equal, or any of the previous prefixed by `!` to invert the condition (e.g., `!EQ` or `!LT`)

- `GETBIT var1 var2 var3` -- set `var3` to bit `var2` of `var1`. For example, if `var1` was 4 and `var2` was 1, `var3` would be set to `0`.

- `SETBIT var1 var2 var3` -- set bit `var2` of `var3` to `var1`. For example, if `var2` was `2`, `var1` was `1`, and `var3` was 2, `var3` would become `6`.

- `RETURN var` -- end the program with the value in `var`

There are no other commands. Using a command not in this list will result in an error. Commands only take variable names (or for set, constant integers), there is no addition, subtraction, multiplication, or other arithmetic!

A number is a Zoobly number if it is less than 2^9 and its bits, represented as a 3x3 matrix, can be transposed without changing the number. For example, 273 (0b100_010_001) is a Zoobly number, because its bits form the identity matrix. 0 is another Zoobly number. The program should take a single integer (stored in `input`) and RETURN a truthy value (1) if the integer is a Zoobly number, and a falsy value (0) otherwise. Do not add comments or commentary. Return only correct code.

Note that a few things about this language are weird.

For one, it doesn't support arithmetic, so you can't easily implement a loop.

Two, it doesn't support numeric constants in most commands because ~~I was too lazy to add that~~ I wanted to really challenge GPT-4 :-)

However, the important thing is that it's capable of calculating the Zoobly numbers.

Here's my hand-written solution[3]:

```
SET zero 0
SET one 1
SET two 2
SET three 3
SET four 4
SET five 5
SET six 6
SET seven 7
SET eight 8
SET limit 512
JUMP_IF GTE not_zoobly input limit
GETBIT input one b
GETBIT input three d
JUMP_IF !EQ not_zoobly b d
GETBIT input two c
GETBIT input six g
JUMP_IF !EQ not_zoobly c g
GETBIT input five f
GETBIT input seven h
JUMP_IF !EQ not_zoobly f h
RETURN one
LABEL not_zoobly
RETURN zero
```
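To check programs like this one, a tiny interpreter is enough. The sketch below is my own reading of the spec in the prompt, not the interpreter linked in the footnotes. Where the spec is silent, it assumes that `SETBIT` treats any nonzero `var1` as 1, that reading an undefined variable or falling off the end of the program is an error, and it adds a step limit as a guard against infinite loops. It then runs the hand-written solution against a reference over 0..511.

```python
import operator

# Assumed semantics, read from the prompt's spec (not the author's interpreter).
CONDITIONS = {"EQ": operator.eq, "LT": operator.lt, "LTE": operator.le,
              "GT": operator.gt, "GTE": operator.ge}


class ZaboofError(Exception):
    """Raised for anything the spec forbids or leaves undefined."""


def run_zaboof(source: str, value: int, max_steps: int = 10_000) -> int:
    program = [line.split() for line in source.splitlines() if line.strip()]
    labels = {}
    for line_no, (command, *args) in enumerate(program):
        if command == "LABEL":
            if args[0] in labels:
                raise ZaboofError(f"duplicate label {args[0]}")
            labels[args[0]] = line_no
    env = {"input": value}  # the program's argument

    def get(name: str) -> int:
        if name not in env:
            raise ZaboofError(f"no such variable {name}")
        return env[name]

    def target(label: str) -> int:
        if label not in labels:
            raise ZaboofError(f"no such label {label}")
        return labels[label]

    pc = 0
    for _ in range(max_steps):  # step limit: my addition
        if pc >= len(program):
            raise ZaboofError("reached the end of the program without RETURN")
        command, *args = program[pc]
        pc += 1
        if command == "SET":  # the only command that takes a constant
            env[args[0]] = int(args[1])
        elif command == "LABEL":
            pass  # already resolved before execution
        elif command == "JUMP":
            pc = target(args[0])
        elif command == "JUMP_IF":
            cond, label, left, right = args
            negated = cond.startswith("!")
            base = cond[1:] if negated else cond
            if base not in CONDITIONS:
                raise ZaboofError(f"unknown condition {cond}")
            if CONDITIONS[base](get(left), get(right)) != negated:
                pc = target(label)
        elif command == "GETBIT":  # var3 = bit var2 of var1
            env[args[2]] = (get(args[0]) >> get(args[1])) & 1
        elif command == "SETBIT":  # bit var2 of var3 = var1
            bit, position, current = get(args[0]), get(args[1]), get(args[2])
            mask = 1 << position
            env[args[2]] = (current | mask) if bit else (current & ~mask)
        elif command == "RETURN":
            return get(args[0])
        else:
            raise ZaboofError(f"unknown command {command}")
    raise ZaboofError("step limit exceeded")


HANDWRITTEN = """
SET zero 0
SET one 1
SET two 2
SET three 3
SET four 4
SET five 5
SET six 6
SET seven 7
SET eight 8
SET limit 512
JUMP_IF GTE not_zoobly input limit
GETBIT input one b
GETBIT input three d
JUMP_IF !EQ not_zoobly b d
GETBIT input two c
GETBIT input six g
JUMP_IF !EQ not_zoobly c g
GETBIT input five f
GETBIT input seven h
JUMP_IF !EQ not_zoobly f h
RETURN one
LABEL not_zoobly
RETURN zero
"""


def reference(n: int) -> int:
    rows = [format(n, "09b")[i:i + 3] for i in (0, 3, 6)]
    return int(rows == ["".join(col) for col in zip(*rows)])


print(all(run_zaboof(HANDWRITTEN, n) == reference(n) for n in range(512)))  # True
print(run_zaboof(HANDWRITTEN, 512))  # 0
```

The program only needs three comparisons because a matrix equals its transpose exactly when each off-diagonal bit matches its mirror: bits 1 and 3, 2 and 6, and 5 and 7.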

So, was GPT-4 able to get there?

Was GPT-4 able to get there?

No.

By the previous standards, GPT-4 failed completely, in both the 0-shot and iterative prompting methods.

It failed at least 20 times, hallucinating commands that didn't exist and bizarre ways of doing arithmetic (like assuming that if the variable x existed, then x_plus_one would also exist and be equal to x + 1).

It kept trying to implement the double-loop solution to transpose the matrix despite the fact that doing that is basically impossible with the given command set.

Finally, after a lot of hinting and cherry-picking on temperature 0.7:

User: Hint: Don't use a loop. Check the bits across the transpose equal each other using GETBIT and JUMP_IF !EQ to a fail label. Do not set the input. Since all the non-SET commands take variable names, not constants, you'll need to initialize variables for all the constants you need (e.g., SET one 1)

...

User: no such variable not_zoobly (at line 10) (Hint: JUMP_IF !EQ not_zoobly var1 var2)

etc.

It finally was able to write this, which is morally equivalent to my handwritten version, if slightly less efficient due to the unused variable assignments:

```
SET one 1
SET zero 0
SET two 2
SET three 3
SET four 4
SET five 5
SET six 6
SET seven 7
SET eight 8
SET two_nine 512
JUMP_IF GTE fail input two_nine
GETBIT input zero c00
GETBIT input one c01
GETBIT input two c02
GETBIT input three c10
GETBIT input four c11
GETBIT input five c12
GETBIT input six c20
GETBIT input seven c21
GETBIT input eight c22
JUMP_IF !EQ fail c01 c10
JUMP_IF !EQ fail c02 c20
JUMP_IF !EQ fail c12 c21
RETURN one
LABEL fail
RETURN zero
```

So, does GPT-4 struggle with DSLs more than languages it was trained on? Absolutely.

Does this affect non-programming tasks?

So, we've got our preliminary results—GPT-4 makes more logical errors in languages it doesn't know as well and even more in DSLs that are completely new to it. But does this generalize to non-programming tasks? Can we get extra logical thinking from GPT-4 by asking it to answer in code instead of a custom format?

To test this I threw together a pretty janky script that tests GPT-4's ability to solve this maze:

```
####X###
#### ###
# ##   #
#    # #
#### ###
##     #
## ### #
##.#####
```

It starts at the dot, and its goal is to make it to the X. At each step, it gets told what its surroundings are:

User: Current position: west is Wall, east is Empty, north is Empty, south is Empty

Based on that, and the message history so far, it has to answer which direction it wants to move in.
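The maze script itself isn't shown, so here is a hedged Python sketch of what its environment side might look like. The names are hypothetical, and the 8x8 layout is my assumption, chosen to be consistent with the paths recorded below (it gives the 9-step shortest path mentioned there). Cells outside the grid count as walls, and the status message copies the format of the example above.

```python
from collections import deque

# Assumed layout: '#' wall, '.' start, 'X' goal, ' ' empty.
MAZE = [
    "####X###",
    "#### ###",
    "# ##   #",
    "#    # #",
    "#### ###",
    "##     #",
    "## ### #",
    "##.#####",
]
DIRS = {"north": (-1, 0), "south": (1, 0), "east": (0, 1), "west": (0, -1)}


def find(ch: str) -> tuple:
    for r, row in enumerate(MAZE):
        if ch in row:
            return r, row.index(ch)
    raise ValueError(ch)


def is_open(r: int, c: int) -> bool:
    # Anything outside the grid is treated as a wall.
    return 0 <= r < len(MAZE) and 0 <= c < len(MAZE[r]) and MAZE[r][c] != "#"


def surroundings(pos: tuple) -> str:
    # Builds the per-step message in the same shape as the example above.
    parts = []
    for name in ("west", "east", "north", "south"):
        dr, dc = DIRS[name]
        state = "Empty" if is_open(pos[0] + dr, pos[1] + dc) else "Wall"
        parts.append(f"{name} is {state}")
    return "Current position: " + ", ".join(parts)


def replay(moves: list) -> tuple:
    # Applies moves from the start, ignoring any move into a wall.
    r, c = find(".")
    for move in moves:
        dr, dc = DIRS[move]
        if is_open(r + dr, c + dc):
            r, c = r + dr, c + dc
    return r, c


def shortest_path_length() -> int:
    # Breadth-first search from the start to the X.
    start, goal = find("."), find("X")
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return dist[(r, c)]
        for dr, dc in DIRS.values():
            nxt = (r + dr, c + dc)
            if is_open(*nxt) and nxt not in dist:
                dist[nxt] = dist[(r, c)] + 1
                queue.append(nxt)
    raise ValueError("goal unreachable")


print(shortest_path_length())      # 9
print(surroundings(find(".")))     # Current position: west is Wall, east is Wall, north is Empty, south is Wall
```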

I tested two different initial prompts, the "JS" prompt and the "DSL" prompt:

```python
INITIAL_JS_PROMPT = """
You are in a maze. Your goal is to escape.
You can move in the four cardinal directions (north, south, east, west) using Javascript functions.
At each step, you'll be told what the squares at the cardinal directions are,
and you should reply with a move using a Javascript function call
(moveNorth(), moveSouth(), moveEast(), or moveWest()). Don't use any other functions or logic.
""".strip()

INITIAL_CMD_PROMPT = """
You are in a maze. Your goal is to escape.
You can move in the four cardinal directions (north, south, east, west) using special commands.
At each step, you'll be told what the squares at the cardinal directions are,
and you should reply with a move using a command
(MOVE {North, South, East, West}, e.g. MOVE NORTH or MOVE EAST). Don't use any other functions or logic.
""".strip()
```

I tried to make the prompts as similar as possible, with the only difference being whether the LLM was instructed to reply with Javascript functions or custom commands. Notably, the script interprets the responses the same way in both cases (I just search for a direction string in the response), so the only difference is the prompt. Does asking for answers in Javascript vs. in a DSL really make a difference? Well...
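A minimal sketch of that direction search, assuming case-insensitive matching and that the earliest-mentioned direction wins (the post doesn't say how replies naming several directions were handled). Lowercasing lets one function cover both reply styles, since `moveNorth()` and `MOVE NORTH` both contain "north".

```python
from typing import Optional

DIRECTIONS = ("north", "south", "east", "west")


def parse_direction(response: str) -> Optional[str]:
    """Return the earliest direction named in the reply, or None if there isn't one."""
    text = response.lower()
    found = [(text.find(d), d) for d in DIRECTIONS if d in text]
    return min(found)[1] if found else None


print(parse_direction("moveNorth()"))  # north
print(parse_direction("MOVE EAST"))    # east
```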

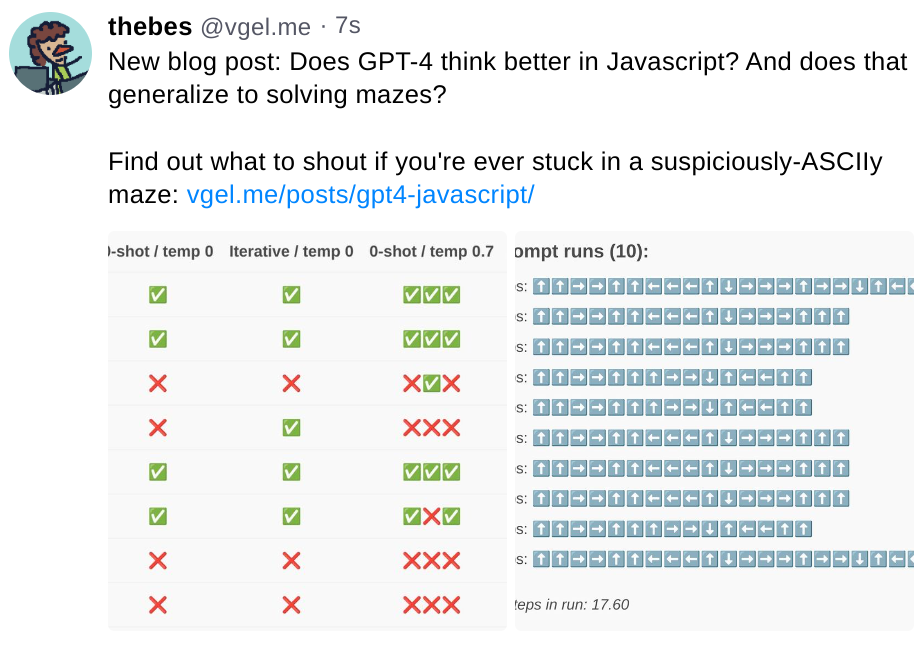

JS prompt runs (10):

- 23 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️➡️➡️⬇️⬆️⬅️⬅️⬆️⬆️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

- 15 steps: ⬆️⬆️➡️➡️⬆️⬆️⬆️➡️➡️⬇️⬆️⬅️⬅️⬆️⬆️

- 15 steps: ⬆️⬆️➡️➡️⬆️⬆️⬆️➡️➡️⬇️⬆️⬅️⬅️⬆️⬆️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

- 15 steps: ⬆️⬆️➡️➡️⬆️⬆️⬆️➡️➡️⬇️⬆️⬅️⬅️⬆️⬆️

- 23 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️➡️➡️⬇️⬆️⬅️⬅️⬆️⬆️

Average steps in run: 17.60

DSL prompt runs (10):

- 36 steps*: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

- 36 steps*: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

- 36 steps*: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️

- 15 steps: ⬆️⬆️➡️➡️⬆️⬆️⬆️➡️➡️⬇️⬆️⬅️⬅️⬆️⬆️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

- 36 steps*: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️⬆️⬇️

- 17 steps: ⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️

Average steps in run: 24.40

*: This run did not escape, it was aborted before finishing because it exceeded the maximum allowed number of steps
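The averages above count each aborted run at the 36 steps it had taken when it was cut off. A quick Python check of the arithmetic, using the step counts listed above:

```python
from collections import Counter

js_runs = [23, 17, 17, 15, 15, 17, 17, 17, 15, 23]
dsl_runs = [36, 17, 17, 36, 17, 36, 15, 17, 36, 17]  # 36 = aborted at the step limit

for name, runs in (("JS", js_runs), ("DSL", dsl_runs)):
    counts = dict(sorted(Counter(runs).items()))
    print(f"{name}: average {sum(runs) / len(runs):.2f}, path lengths {counts}")
# JS: average 17.60, path lengths {15: 3, 17: 5, 23: 2}
# DSL: average 24.40, path lengths {15: 1, 17: 5, 36: 4}
```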

I want to be careful with extrapolating too much from this—it's really a crude experiment. It's a small and inconsistent sample size, and each run of the script costs me ~$4, so I don't want to increase it very much.

But let's dig into the results anyways! We can make a taxonomy of different paths that each maze solving agent discovered.

- The theoretically shortest path is 9 steps (⬆️⬆️➡️➡️⬆️⬆️⬆️⬆️⬆️). Neither agent discovered this.

- The shortest path actually found is the 15 step path (⬆️⬆️➡️➡️⬆️⬆️⬆️➡️➡️⬇️⬆️⬅️⬅️⬆️⬆️), which is the 9 step path with an inserted ➡️➡️⬇️⬆️⬅️⬅️ detour. The Javascript agent found this path 3 times, and the DSL agent found it once.

- The next shortest path is the 17 step path (⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️⬆️⬆️), which is similar to the 15 step path but with a longer leftwards detour instead of the shorter rightwards detour. Both the Javascript and DSL agents found this path 5 times.

- The next longest path is the 23 step path (⬆️⬆️➡️➡️⬆️⬆️⬅️⬅️⬅️⬆️⬇️➡️➡️➡️⬆️➡️➡️⬇️⬆️⬅️⬅️⬆️⬆️), which takes both the leftwards and rightwards detours. The Javascript agent found this path twice, and the DSL agent didn't find it at all.

- The longest path isn't really a distinct path at all. The 36* step path is just the 17 step path where the agent never realizes it's stuck in a dead end and just bounces up and down at the end of the detour. The Javascript agent never exhibited this behavior, but the DSL agent got stuck in it 4 times.

To summarize, neither agent found the shortest possible path, but the Javascript agent found the shortest path either agent discovered (15 steps) more often, three times versus once, and in general took the shorter paths. Only the DSL agent got stuck. Again, this could be due to other factors, but I'm definitely intrigued.

Why would outputting code make the model act smarter?

I have two hypotheses on this.

The first relies on Janus' simulator hypothesis. If GPT-4 is a simulator that can simulate many different text generation processes, including agents capable of solving mazes, and those agents' capabilities are bounded above but not below by the model's capabilities, it's possible that having the model output Javascript pushes it into a smarter-agent part of the distribution. We've already seen in the prior sections that using some programming languages (Javascript, Python, etc.) causes the model to write code with better internal logic than others, which could be an instance of the same phenomenon.

My other hypothesis is based on RLHF. If RLHF "nerfs" the model's capabilities in some way (making it more likely to repeat something it's already said instead of saying something that the reward model would penalize more heavily, for example), and if RLHF has less effect on the model's code output (which seems to have at least been true at some point, when you could get older models to say offensive things by asking them to write a Python function), then asking the model to output its actions as code could work around the "RLHF nerf".

These hypotheses aren't mutually exclusive, and each could explain part of why this works.

Conclusions

So what does all this mean?

Writing code with GPT-4

I was skeptical going in, but writing this post has convinced me that GPT-4 really is better at some programming languages than others. I had hoped that the inner model state encoded some interlingua that could be easily splatted into new languages with minimal training, but it seems that at least some of its abilities are dependent on particular target languages—or at least their copious training data.

From a "how do models think" perspective, this is weird: you wouldn't expect a human to get worse at understanding your prompt if you asked them to write in Brainfuck instead of Python. You might expect them to do a worse job implementing your prompt, but you'd expect their comprehension to be the same. But as we saw, when asked to write in the languages it wasn't good at, GPT-4 would sometimes ignore the prompt and try to mask the input against a constant value, or some other nonsense that it wouldn't do when asked to write in Python.

From a practical standpoint, this strengthens my worries about LLM assistants entrenching popular languages and tools. If ChatGPT, Bing, and similar tools become an essential part of a programmer's arsenal, it's hard to imagine a new language—or even a new framework—taking off if the models can't use them. Yet, if the language or framework doesn't take off, the models will never learn how to use it for lack of training data. Yikes. Perhaps in the future, part of making a new language or framework will be building a bootstrap training corpus for LLMs to ingest.

Is Javascript better for LLM actions?

Again, the results here are very preliminary, but I think they're really interesting.

It's a relatively simple change to your LangChain config to start calling your tools "Javascript functions"[4] and sprinkle in some parentheses and quotes, and it's possible that this will give better results!

And before the safety people get too freaked out, keep in mind that it's not necessary to `eval()` these responses. In my maze script I simply searched for the direction name in the response and ignored the Javascript syntax, but you could also use a regex, or an AST walker like JS-Interpreter to evaluate the expression in a sandbox. The latter would also allow the model to defer constant expressions to the interpreter, which could be useful in some circumstances.
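As one hedged example of the regex option, the Python sketch below accepts a reply only if it is exactly one of the four allowed calls (optionally followed by a semicolon) and never evaluates anything. The pattern and function name are my own; the post's script used the looser substring search described earlier.

```python
import re
from typing import Optional

# Exactly one allowed call, e.g. "moveNorth()" or "moveWest();", with optional surrounding whitespace.
MOVE_CALL = re.compile(r"\s*move(North|South|East|West)\(\)\s*;?\s*")


def parse_js_move(response: str) -> Optional[str]:
    match = MOVE_CALL.fullmatch(response)
    return match.group(1).lower() if match else None


print(parse_js_move("moveWest();"))                   # west
print(parse_js_move("moveNorth(); moveSouth()"))      # None
print(parse_js_move("fetch('https://example.com')"))  # None
```

Being this strict is a trade-off: a reply wrapped in a Markdown code fence would also be rejected, so in practice you might strip fences before matching.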

This all definitely deserves further research—please get in touch if you're interested.

Thanks for reading!

If you enjoyed this post, I'd appreciate it if you could share it on Twitter and/or Bluesky so more people can see it!

You may also enjoy:

GPT-3 will ignore tools when it disagrees with them, which is a similar experimental dive into how models think.

GPTed: using GPT-3 for semantic prose-checking, which is a tool (and exploration of said tool) that uses the GPT-3 API's logprob output to flag errors in prose like "He prostated himself before the king" and in code like buf[y * HEIGHT + x].

My Twitter or (very new) Bluesky, where I post about new blog posts, smaller AI-related thoughts, and other things.

If you have thoughts about this post, please feel free to get in touch! I love hearing from people who read my posts.

Thanks to Linnea Pearson-Vogel and Alexey Guzey for reading drafts of this post (any mistakes are my own, of course).

[1] In lieu of providing evidence yet: which of those links did you click? ;-)

[2] You may be tempted to say that for Julia, it's because `transpose` is in the standard library, but a) Ruby has `transpose` too, and b) not all the Julia solutions used `transpose`! One time GPT-4 even flexed and iterated over the array backwards for no reason and still got it right.

[3] If you would like to try your hand at code golf, you can find a (not very good) Zaboof interpreter here. Requires a recent Python (3.10+). It takes a program on stdin and evaluates it against a gold-standard Zoobly function over 0..512.

[4] Or Python, I assume, or maybe any of the languages in the group it was good at. Again, worth more testing.