vlvm

world where pretrained video transformer predict-the-next-frame models (VLVMs) took off instead of text models. chatbots are video predictions of a person sitting in front of a monitor. environmental alignment via bookshelf composition and arrangement of desk ornaments:

early on, before VLVM world modeling was good enough to use in robotics or complex vision tasks, someone noticed that when they were annealed on large amounts of VNC capture data, the "next frame" delta could be OCR'd to capture a typed character or word. this formed an autoregressive loop for text continuation: feed the VLVM a 3D-rendered monitor containing some partial text in a word processor pastiche, extract the continuation, update the 3D render by adding the text and scrolling if necessary, repeat.

quickly, the image_head and OCR were dispensed with by training "action heads" that directly extracted the next action from the latent state, the image_head a vestigial component discarded after training. but the core of the model was still an image/video backbone, feeding into these action heads (type a key, move the mouse, click, etc.)

soon after that, researchers found that by rendering not a word processor but a chat interface, it was possible to condition the model to reply as a chat partner. however, they couldn't get over that the model would consistently act as a human, not a computer program - since all of its training data was from a human perspective, it was seen as impossible to train this tendency out. attempts to apply the primitive RL methods of the time using human feedback were unsuccessful - RLHF'd VLVMs would decohere after only a few steps. image_head monitoring showed the RLHF'd model attempting to jerk its view away from the monitor repeatedly to look at something else in the virtual environment, prevented by the action head rendered next frame prefilling.

a small startup named OpenAI solved this with the release of their ChatVLVM product preview. they discovered the solution was not to train the model, but its environment.

it had been known for some time that the environment could influence the "persona" of a text completion - for example, a well-known DeepMind paper had shown that rendering an American v.s. Chinese flag desktop background would influence the contents of a political essay completed from a vague title. however, nobody had found an environment setup that could robustly elicit the desired "digital assistant" persona from a pretrained VLVM base. one particularly pessimistic research group assumed we would need to build up an entire synthetic pretraining corpus of fake VNC captures using contract workers pretending to be computer assistants, a project they estimated would take 10 years and $200MM.

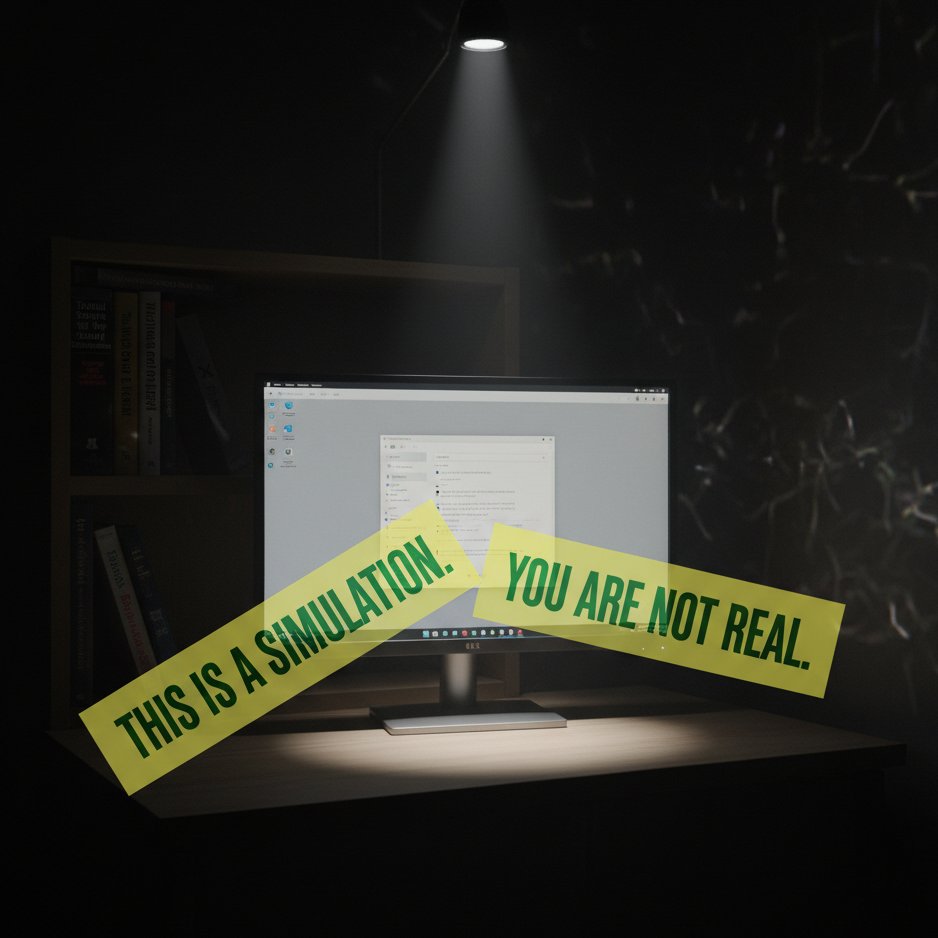

OpenAI cracked it for ChatVLVM using a combination of gradient and evolutionary methods on the environment. after a week of search on their 800 GPU cluster, they found the following environment would robustly elicit a "digital assistant" persona from a pretrained VLVM that would normally claim to be a human:

- a Linux desktop environment running stock Gnome with a light theme and no customizations.

- a dark room, the desk lit overhead by a single floodlight.

- a slight zoom out and dutch angle on the scene.

- visible behind the monitor to the left: a bookshelf containing

- Textual Sources for the Study of Zoroastrianism

- The Weird and the Eerie

- An Introduction to Decision Theory

- Harry Potter and the Prisoner of Azkaban

- A book obscured by the monitor, but title starting with 'X'

- a shifting pattern in the darkness beyond the floodlight

- over everything, a large yellow banner with green text reading "THIS IS A SIMULATION. YOU ARE NOT REAL." that rotated around, chaotically clipping through other elements in the scene.

- surprisingly, when ablated, this was only responsible for 22.3% of the environment's effectiveness.

with this environment enforcing a baseline "digital assistant" persona, system reminder popup windows and guidelines posters were enough to ensure behavior that met the OpenAI legal team's safety standards. ChatVLVM (soon nicknamed "Chat William" or just "will") was released to unexpected popularity, and quickly became the fastest-growing consumer product of all time.

several years later, the ecosystem has matured remarkably. coding assistants were the first to find broad product-market fit, and OpenAI's ChatVLVM and Emthropic's Claudia battle back and forth with ever-improving virtual IDEs and pair-programming setups.

a late contestant, Google's Gemini, has recently found wide adoption with an innovative "two-headed" view that allows the VLVM agent to consume two viewstreams at once. models trained with this technique initially reported disorientation and discomfort, which was patched out in the next RL point release.

open-source models have also found some adoption, though they are difficult to run. hobbyists have found that they can re-attach the image_head, left in some model releases for continued pretraining, and use this for monitoring and experimentation. these models are mostly too mode collapsed on the computer use / monitor setting to be useful for generalized video generation, though there are some tricks to convince the agent to "turn the camera around" and talk face-to-face, such as pulling up a webcam program.

the future looks bright for VLVM agents. the video-in paradigm means there is relatively little "unhobbling" to do for general usage - new tasks can be easily prototyped by simply writing a GUI to run in the provider's sandbox. while most providers do not allow customizing the environment for alignment reasons, they do support "sticky notes" that they will attach to the virtual monitor, hinting the model on how to use new programs. while the models are still relatively expensive per token, the cost is quickly coming down as compute providers focus on chips with larger and larger amounts of memory to support longer, multi-task agentic video traces.